I updated my Duplicity backup script for my Linux systems. I use different backup destinations for each server: home servers back up to my main home server via SFTP, while my main home server and my AWS host back up to Backblaze B2 cloud storage. Combined with Duplicity's incremental backups, B2 is one of the most affordable solutions out there.

Older versions of Ubuntu and Homebrew ship older versions of duplicity (and possibly Python) that may not be compatible with duplicity's B2 backend for Backblaze's file storage.

- Duplicacy (a separate tool, not to be confused with Duplicity) is a cloud backup tool built on lock-free deduplication across multiple computers. Every computer backed up with Duplicacy uploads to a single Backblaze B2 bucket, and any chunk that appears on more than one of those computers is stored in B2 only once, so deduplication works across all machines rather than per machine.

- When running Duplicity to back up to B2 (Backblaze), it fails with the following error: `Attempt 1 failed. AttributeError: B2ProgressListener instance has no attribute '__exit__'`, followed by `Attempt 2 failed.`

- As I understand it, Duplicity backs up files by producing encrypted tar-format volumes and uploading them to a remote location such as Backblaze B2. Backblaze publishes a how-to for this setup on its website.

I set things up so that the first backup of each month is a new full backup. Depending on how volatile your data is, you can run as many incremental backups during the month as you like; I stick with one per day.
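One way to get a monthly full backup with daily incrementals is to pick duplicity's `full` or `incremental` action based on the date. The helper below is a minimal sketch of that idea; the function name `backup_action` is hypothetical, and the source path and B2 URL in the usage comment are placeholders.

```bash
# Hypothetical helper: choose the duplicity action for this run.
# Returns "full" on the 1st of the month and "incremental" on every other day.
backup_action() {
  # Two-digit day of month, as printed by `date +%d`; defaults to today
  local day="${1:-$(date +%d)}"
  if [ "$day" = "01" ]; then
    echo "full"
  else
    echo "incremental"
  fi
}

# Usage (placeholder source directory and target URL):
# duplicity "$(backup_action)" /home/user "b2://account_id:application_key@bucket_name"
```

A date check like this skips the monthly full backup if the run on the 1st is missed. duplicity's `--full-if-older-than 1M` option avoids that problem, because it starts a new full backup on the first run after the last full one is more than a month old.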

Amazon S3 has been around for more than ten years now and I have been happily using it for offsite backups of my servers for a long time. Backblaze’s cloud backup service has been around for about the same length of time and I have been happily using it for offsite backups of my laptop, also for a long time.

In September 2015, Backblaze launched a new product, B2 Cloud Storage. S3 standard pricing is pretty cheap (and Glacier is even cheaper), but B2 claims to be “the lowest cost high performance cloud storage in the world”. The first 10 GB of storage is free, as is the first 1 GB of downloads each day. For my small server backup requirements, this sounds perfect.

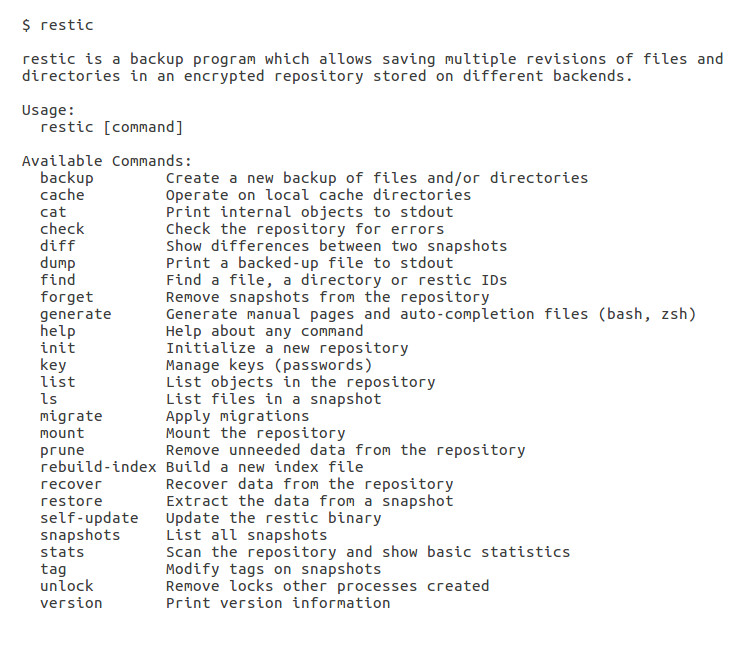

My backup tool of choice is duplicity, a command line backup tool that supports encrypted archives and a whole load of different storage services, including S3 and B2. It was a simple matter to create a new bucket on B2 and update my backup script to send the data to Backblaze instead of Amazon.
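Switching providers mostly comes down to changing the target URL passed to duplicity. Its B2 backend accepts URLs of the form `b2://account_id:application_key@bucket_name/folder/`. The sketch below assembles such a URL. The `b2_url` helper is hypothetical, and the credentials shown are placeholders.

```bash
# Hypothetical helper: build a duplicity B2 target URL of the form
# b2://account_id:application_key@bucket_name[/folder]
b2_url() {
  local account_id="$1" app_key="$2" bucket="$3" folder="${4:-}"
  local url="b2://${account_id}:${app_key}@${bucket}"
  # Append an optional folder (prefix) inside the bucket
  if [ -n "$folder" ]; then
    url="${url}/${folder}"
  fi
  printf '%s\n' "$url"
}

# Usage (placeholder credentials, bucket, and folder):
# duplicity /var/www "$(b2_url account_id application_key my-bucket web)"
```

The rest of the duplicity command stays the same, which is why moving from S3 to B2 needed only a new bucket and a new target URL.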

Here is a simple backup script that uses duplicity to keep one month’s worth of backups. In this example we dump a few MySQL databases but it could easily be expanded to back up any other data you wish.

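```bash
#!/bin/bash
########################################################################
# Uses duplicity (http://duplicity.nongnu.org/)
# Run this daily and keep 1 month's worth of backups
########################################################################

# b2 variables (placeholders: replace with your own values)
B2_ACCOUNT_ID=account_id
B2_APPLICATION_KEY=application_key
BUCKET=bucket_name
ENCRYPT_KEY=gpg_key_id
# Assumed: passphrase for the GPG key, read by duplicity from the
# PASSPHRASE environment variable (needed by verify to decrypt)
export PASSPHRASE=gpg_passphrase

# Database variables
DB_USER='root'
DB_PASS='db_password'  # placeholder
DATABASES=(my_db_1 my_db_2 my_db_3)

# Working directory (placeholder path)
WORKING_DIR=/tmp/duplicity-backup

########################################################################

# Make the working directory
mkdir -p "$WORKING_DIR"

# Dump the databases
for database in "${DATABASES[@]}"; do
  mysqldump -u"$DB_USER" -p"$DB_PASS" "$database" > "$WORKING_DIR/$database.sql"
done

# Back up to B2 (a new full backup if the last full one is older than 7 days)
duplicity --full-if-older-than 7D --encrypt-key="$ENCRYPT_KEY" \
  "$WORKING_DIR" "b2://$B2_ACCOUNT_ID:$B2_APPLICATION_KEY@$BUCKET"

# Verify the backup against the local files
duplicity verify --encrypt-key="$ENCRYPT_KEY" \
  "b2://$B2_ACCOUNT_ID:$B2_APPLICATION_KEY@$BUCKET" "$WORKING_DIR"

# Cleanup: delete backups older than 30 days
duplicity remove-older-than 30D --force --encrypt-key="$ENCRYPT_KEY" \
  "b2://$B2_ACCOUNT_ID:$B2_APPLICATION_KEY@$BUCKET"

# Remove the working directory and clear the passphrase
rm -rf "$WORKING_DIR"
unset PASSPHRASE
```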

Run this via a cron job, something like this:
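For example, assume the script is saved at the hypothetical path `/usr/local/bin/duplicity-backup.sh` and made executable. A crontab entry like the one below (added with `crontab -e`) would run it daily at 03:00 and append its output to a log file. The log path is also a placeholder.

```
# m h dom mon dow  command
0 3 * * * /usr/local/bin/duplicity-backup.sh >> /var/log/duplicity-backup.log 2>&1
```

Running once a day matches the `--full-if-older-than 7D` and `remove-older-than 30D` settings in the script. Together they give a weekly full backup, daily incrementals, and roughly a month of history.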